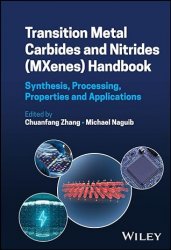

Title: Delta Lake: The Definitive Guide: Modern Data Lakehouse Architectures with Data Lakes (Final Release)

Authors: Denny Lee, Tristen Wentling, Scott Haines, Prashanth Babu

Publisher: O’Reilly Media, Inc.

Year: 2025

Pages: 445

Language: English

Format: EPUB

Size: 10.1 MB

Ready to simplify the process of building data lakehouses and data pipelines at scale? In this practical guide, learn how Delta Lake is helping data engineers, data scientists, and data analysts overcome key data reliability challenges with modern data engineering and management techniques.

Authors Denny Lee, Tristen Wentling, Scott Haines, and Prashanth Babu (with contributions from Delta Lake maintainer R. Tyler Croy) share expert insights on all things Delta Lake, including how to run batch and streaming jobs concurrently and accelerate the usability of your data. You'll also uncover how ACID transactions bring reliability to data lakehouses at scale.

There have been many technological advancements in data systems (high-performance computing [HPC] and object databases, for example). A simplified overview of the advancements in querying and aggregating large amounts of business data over the last few decades would cover data warehouses, data lakes, and lakehouses. Overall, these systems address online analytical processing (OLAP) workloads.

Data warehouses are purpose-built to aggregate and process large amounts of structured data quickly. To protect this data, they typically rely on relational databases that provide ACID transactions, which are crucial for ensuring data integrity in business applications.

Data lakes are scalable storage repositories (HDFS and cloud object stores such as Amazon S3, ADLS Gen2, and GCS) that hold vast amounts of raw data in its native format until needed. Unlike traditional databases, data lakes are designed to handle internet-scale volume, velocity, and variety of data (e.g., structured, semistructured, and unstructured data). These attributes are commonly associated with big data. Data lakes changed how we store and query large amounts of data because they are designed to scale out the workload across multiple machines or nodes. They are file-based systems that run on clusters of commodity hardware.

This book helps you:

Understand key data reliability challenges and how Delta Lake solves them

Explain the critical role of Delta transaction logs as a single source of truth

Learn the Delta Lake ecosystem with technologies like Apache Flink, Kafka, and Trino

Architect data lakehouses with the medallion architecture

Optimize Delta Lake performance with features like deletion vectors and liquid clustering
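The list above describes the Delta transaction log as a single source of truth. The toy Python sketch below (standard library only) illustrates that idea. It is not the Delta Lake implementation or any library's API. It assumes only the broad shape of the Delta log: zero-padded, numbered JSON commit files in a `_delta_log` directory, each holding `add` and `remove` actions, with the current set of data files recovered by replaying commits in version order. The helper names `commit` and `snapshot` are hypothetical. Real `add` actions carry many more fields (size, timestamps, partition values, statistics), and real engines also use checkpoints and storage-specific put-if-absent guarantees. Here, `O_EXCL` on a local filesystem merely stands in for the latter.

```python
import json
import os
import tempfile


def commit(table_dir: str, version: int, actions: list[dict]) -> None:
    """Record one commit as newline-delimited JSON actions (toy sketch, not Delta's API)."""
    log_dir = os.path.join(table_dir, "_delta_log")
    os.makedirs(log_dir, exist_ok=True)
    # Zero-padded names make lexical order match version order.
    path = os.path.join(log_dir, f"{version:020d}.json")
    # Claim this version exclusively: O_EXCL raises FileExistsError if another
    # writer already created the file (a local stand-in for put-if-absent).
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_EXCL)
    with os.fdopen(fd, "w") as f:
        for action in actions:
            f.write(json.dumps(action) + "\n")


def snapshot(table_dir: str) -> set[str]:
    """Replay add/remove actions in version order to get the live data files."""
    log_dir = os.path.join(table_dir, "_delta_log")
    files: set[str] = set()
    for name in sorted(os.listdir(log_dir)):
        if not name.endswith(".json"):
            continue
        with open(os.path.join(log_dir, name)) as f:
            for line in f:
                action = json.loads(line)
                if "add" in action:
                    files.add(action["add"]["path"])
                elif "remove" in action:
                    files.discard(action["remove"]["path"])
    return files


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as table:
        commit(table, 0, [{"add": {"path": "part-0.parquet"}}])
        commit(table, 1, [{"add": {"path": "part-1.parquet"}}])
        # A compaction-style commit: replace two files with one in a single version.
        commit(table, 2, [
            {"remove": {"path": "part-0.parquet"}},
            {"remove": {"path": "part-1.parquet"}},
            {"add": {"path": "part-2.parquet"}},
        ])
        print(sorted(snapshot(table)))  # ['part-2.parquet']
        try:
            # A second writer trying to claim version 2 is rejected.
            commit(table, 2, [{"add": {"path": "conflict.parquet"}}])
        except FileExistsError:
            print("version 2 already committed")
```

Because each commit claims its version number exclusively, two writers cannot both record the same version. The loser gets `FileExistsError` and, in a real system, would re-read the log and retry. Recording several `remove` and `add` actions in one commit is how a change such as compaction can appear in the history as a single versioned step.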

Who This Book Is For:

As a team of production users and maintainers of the Delta Lake project, we’re thrilled to share our collective knowledge and experience with you. Our journey with Delta Lake spans from small-scale implementations to internet-scale production lakehouses, giving us a unique perspective on its capabilities and on how to work around its complexities.

The primary goal of this book is to provide a comprehensive resource for both newcomers and experts in data lakehouse architectures. For those just starting with Delta Lake, we aim to elucidate its core principles and help you avoid the common mistakes we encountered in our early days. If you’re already well versed in Delta Lake, you’ll find valuable insights into the underlying codebase, advanced features, and optimization techniques to enhance your lakehouse environment.

Throughout these pages, we celebrate the vibrant Delta Lake community and its collaborative spirit! We’re particularly proud to highlight the development of the Delta Rust API and its widely adopted Python bindings, which exemplify the community’s innovative approach to expanding Delta Lake’s capabilities. Delta Lake has evolved significantly since its inception, growing beyond its initial focus on Apache Spark to embrace a wide array of integrations with multiple languages and frameworks. To reflect this diversity, we’ve included code examples featuring Flink, Kafka, Python, Rust, Spark, Trino, and more. This broad coverage ensures that you’ll find relevant examples regardless of your preferred tools and languages.

While we cover the fundamental concepts, we’ve also included our personal experiences and lessons learned. More importantly, we go beyond theory to offer practical guidance on running a production lakehouse successfully. We’ve included best practices, optimization techniques, and real-world scenarios to help you navigate the challenges of implementing and maintaining a Delta Lake–based system at scale.

Whether you’re a data engineer, architect, or scientist, our goal is to equip you with the knowledge and tools to leverage Delta Lake effectively in your data projects. We hope this guide serves as your companion in building robust, efficient, and scalable lakehouse architectures.
